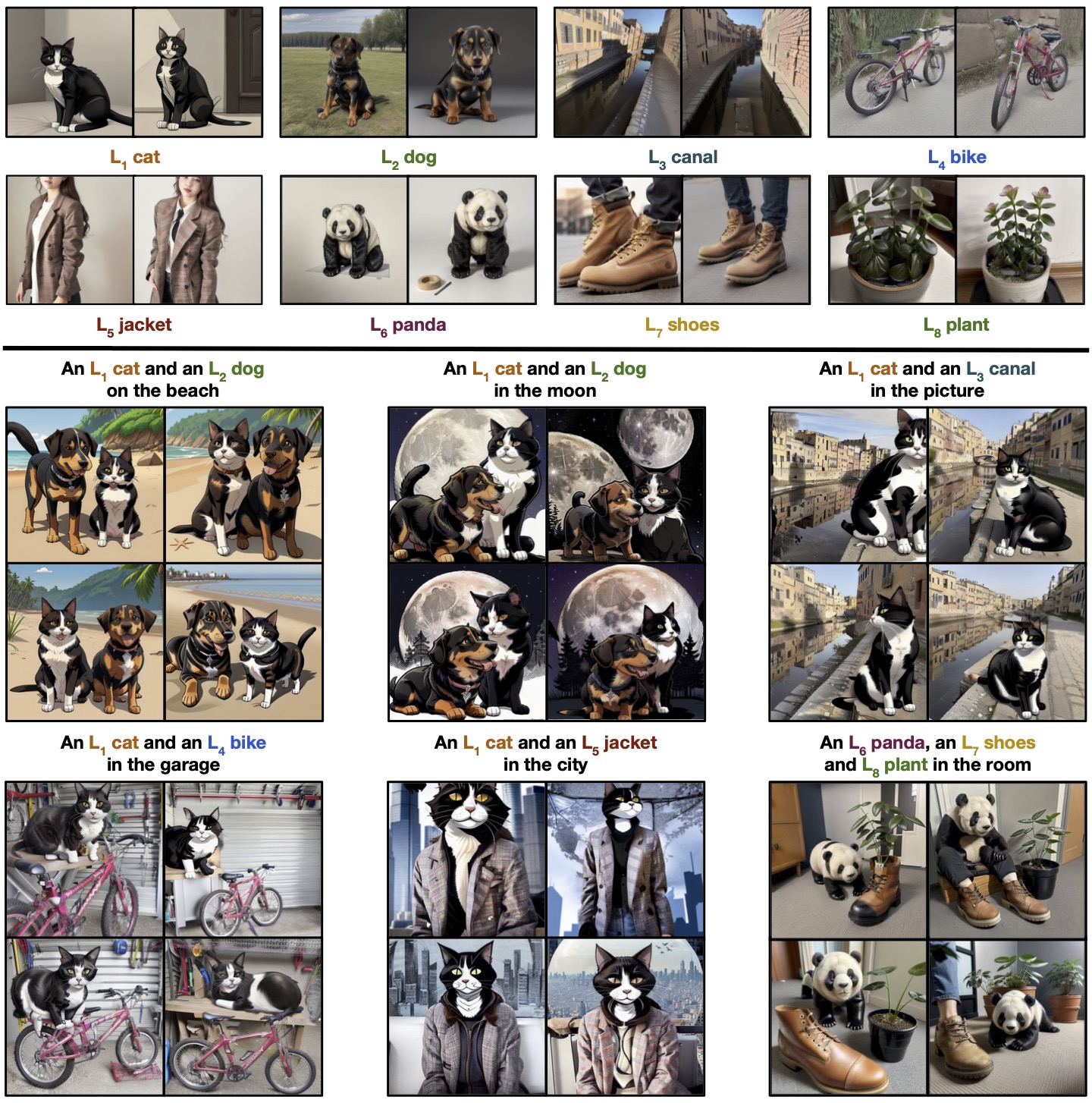

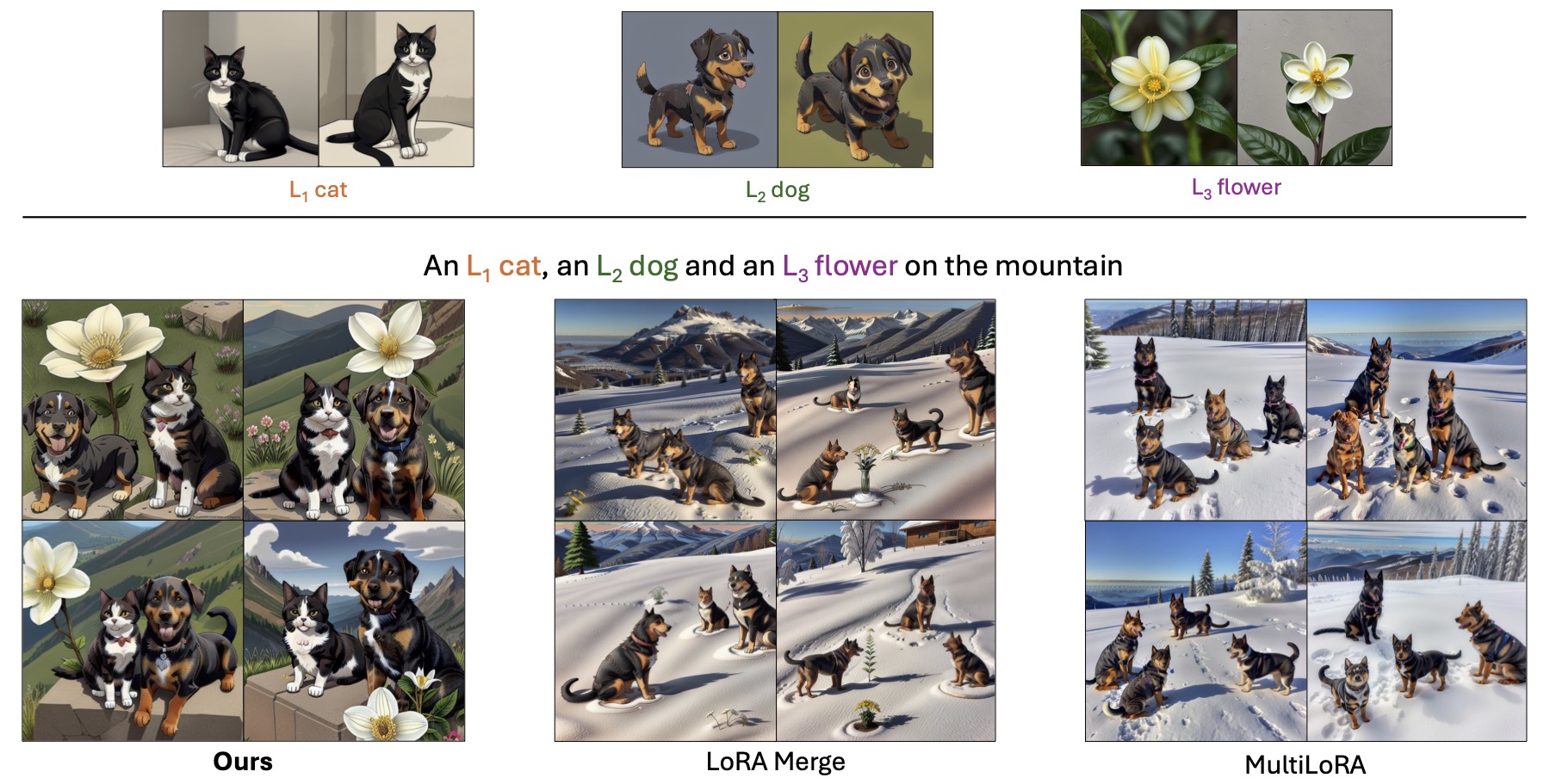

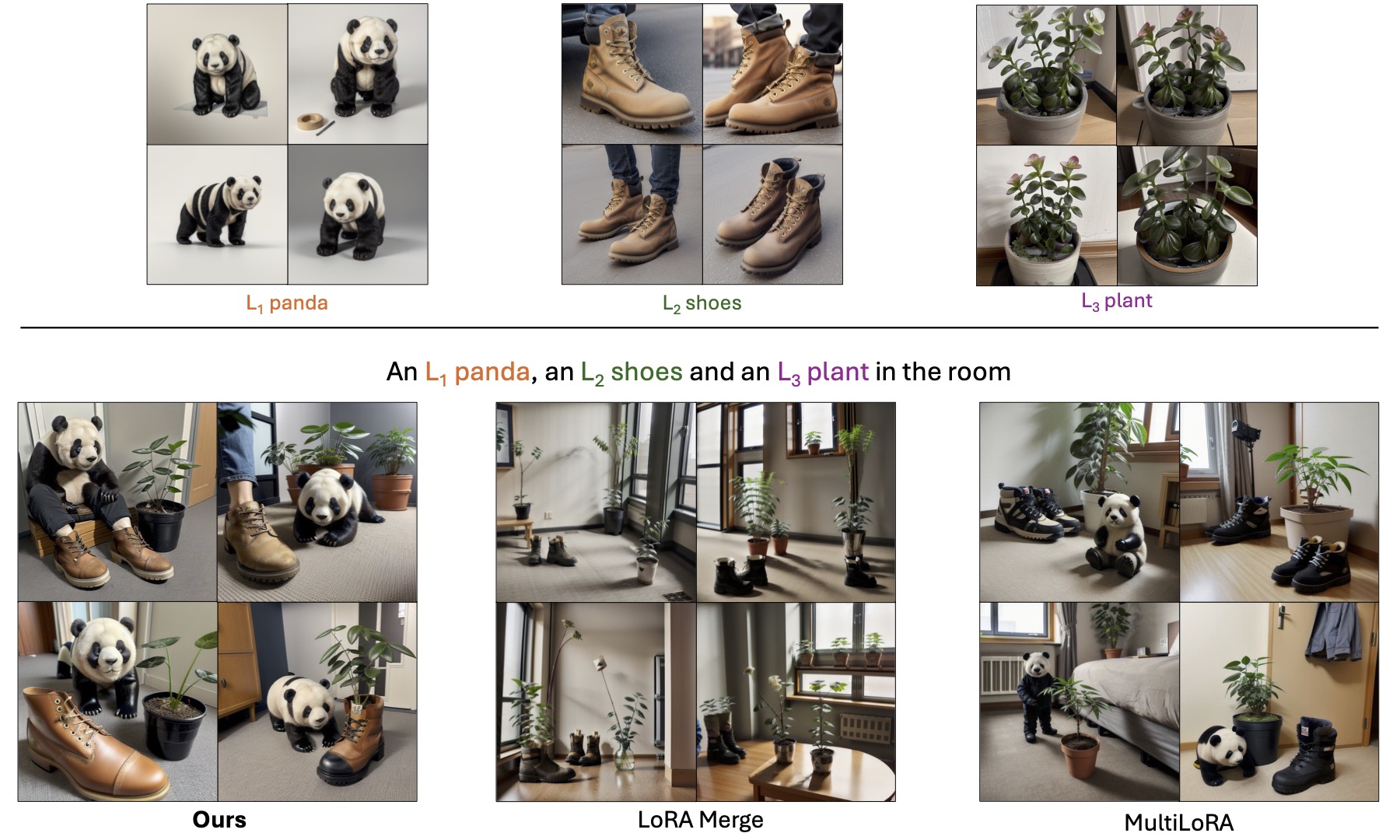

Low-Rank Adaptations (LoRAs) have emerged as a powerful and popular technique in image generation, offering an effective way to adapt and refine pre-trained deep learning models for specific tasks without comprehensive retraining. Given pre-trained LoRA models, such as one representing a specific cat and another representing a particular dog, the objective is to generate an image that faithfully embodies both animals as defined by the LoRAs. However, seamlessly blending multiple concept LoRAs to capture a variety of concepts in one image proves to be a significant challenge. Common approaches often fall short, primarily because the attention mechanisms within different LoRA models overlap, leading to scenarios where one concept is completely ignored (e.g., omitting the dog) or where concepts are incorrectly combined (e.g., producing an image of two cats instead of one cat and one dog). CLoRA addresses these issues by updating the attention maps of multiple LoRA models and leveraging them to create semantic masks that facilitate the fusion of latent representations. Our method enables the creation of composite images that reflect the characteristics of each LoRA, successfully merging multiple concepts or styles. Our qualitative and quantitative evaluations demonstrate that our approach outperforms existing methods for image generation with LoRAs. Furthermore, we share our source code, benchmark dataset, and trained LoRA models to promote further research on this topic.

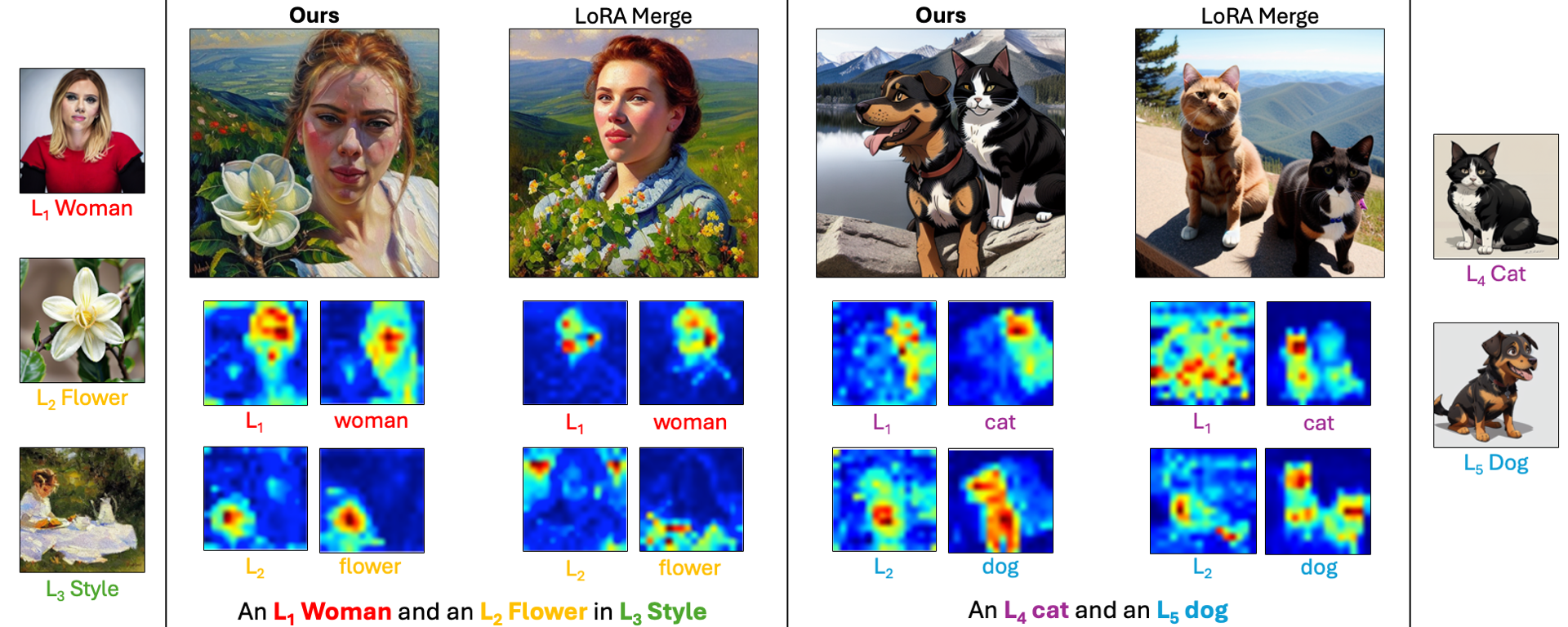

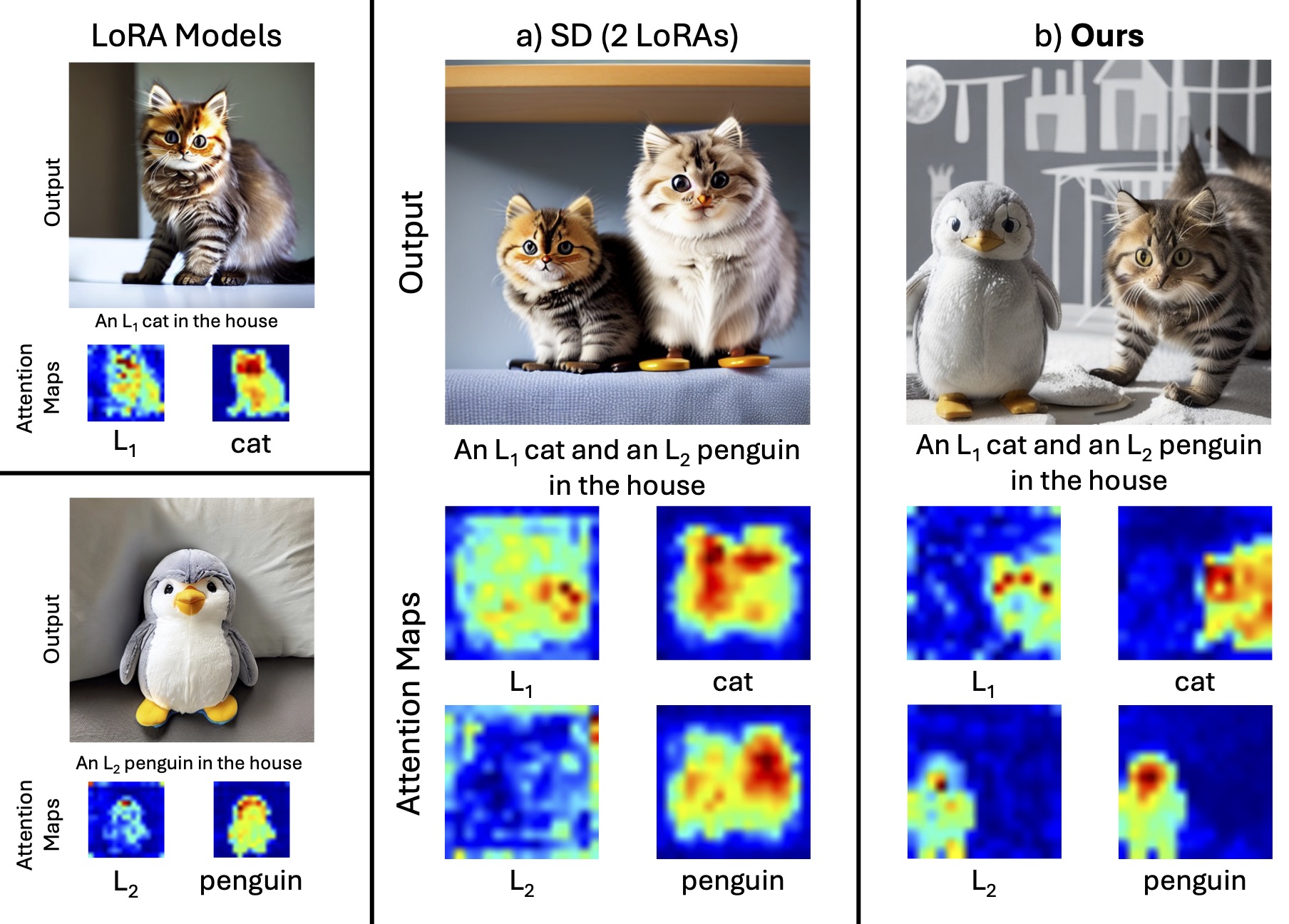

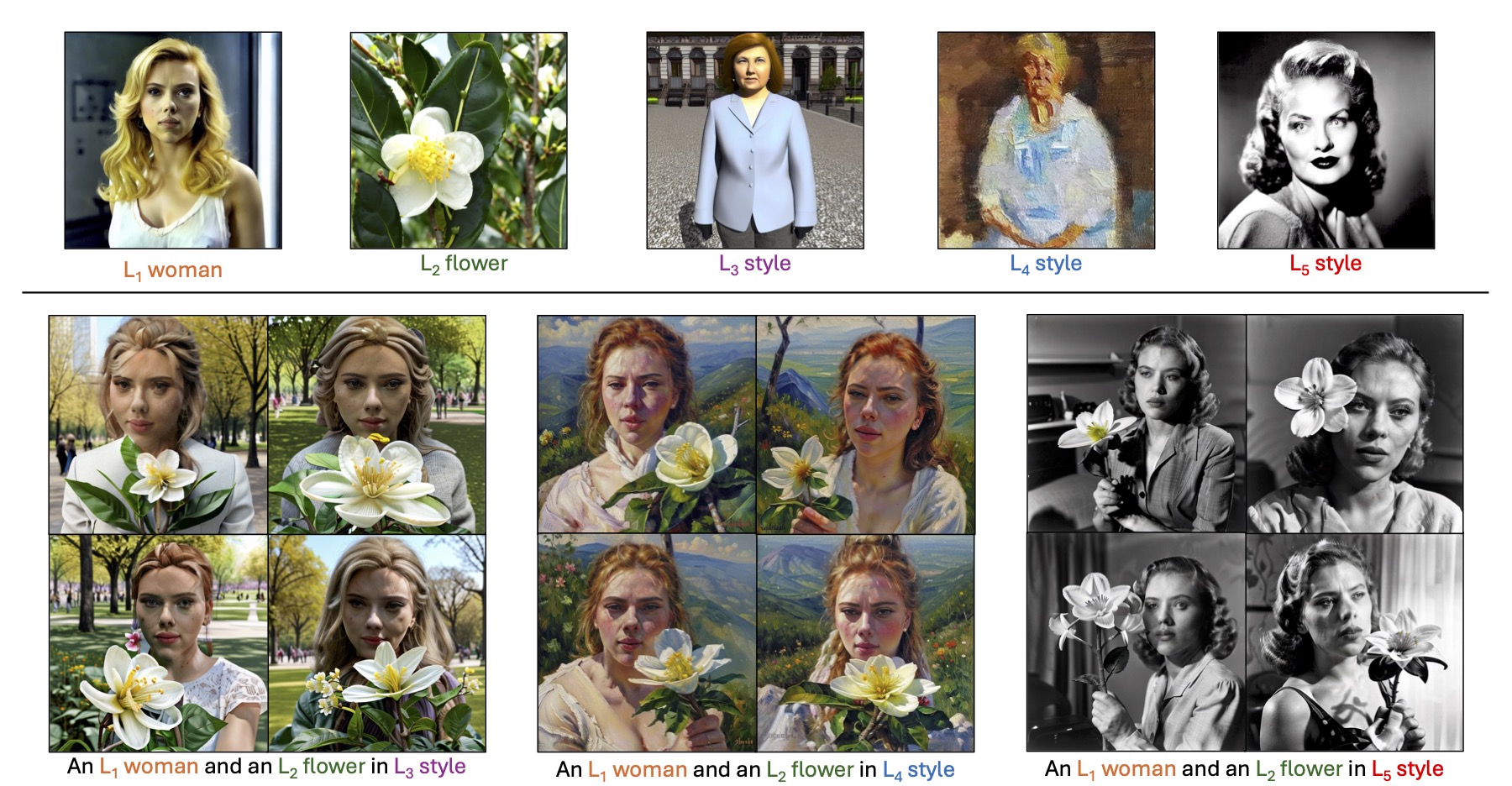

Attention overlap and attribute binding issues in merging multiple LoRA models (a). Merging multiple LoRA models often fails to represent both concepts accurately, because their attention maps tend to overlap. Our technique adjusts the attention maps at test time to separate the different LoRA models, thereby producing compositions that accurately reflect the intended concepts (b).

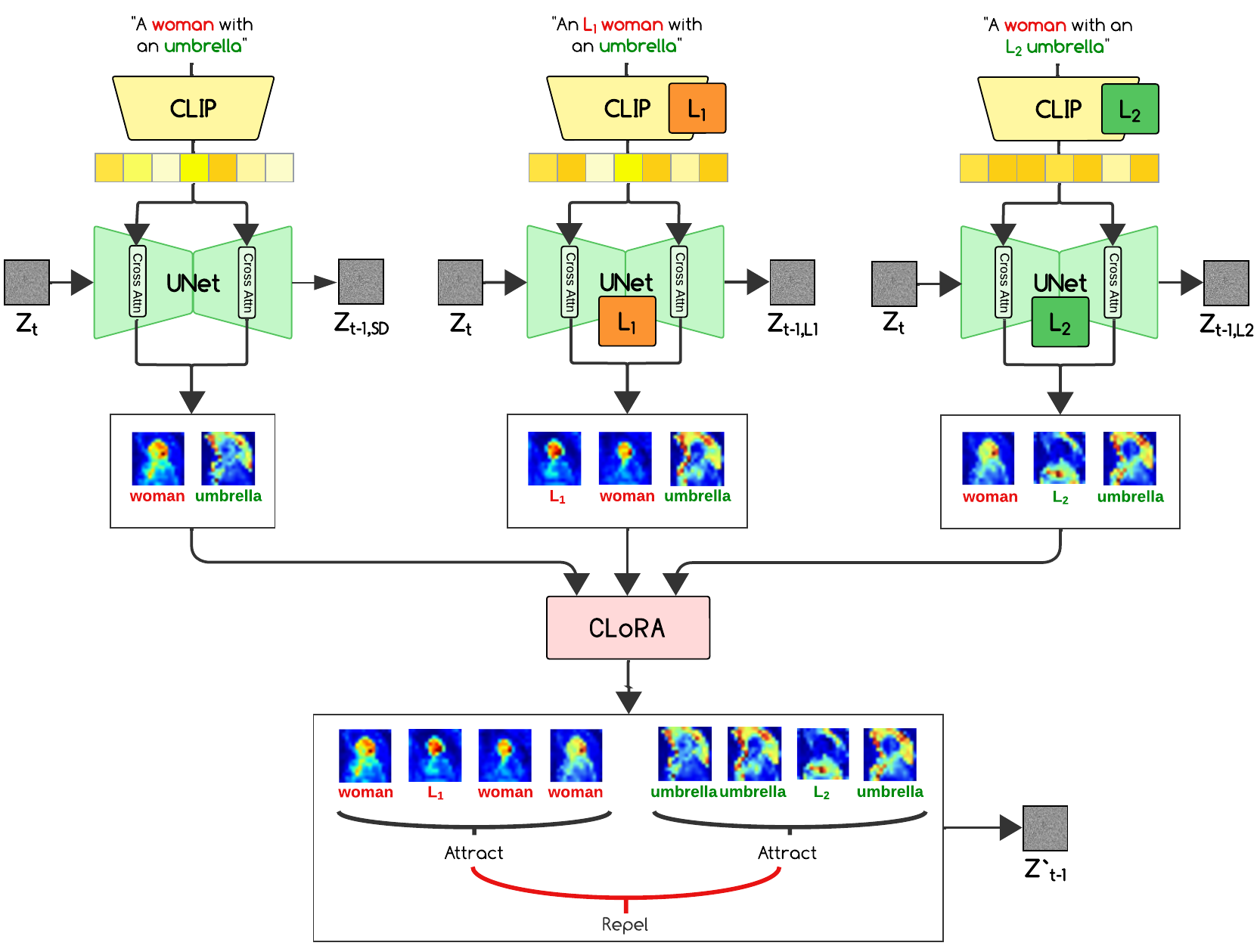

Illustration of our method, CLoRA, which combines LoRAs (Low-Rank Adaptation models) using attention maps to guide Stable Diffusion image generation with user-defined concepts. The process involves prompt breakdown, attention-guided diffusion, and a contrastive loss for consistency.
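The contrastive step can be illustrated with a toy example. The caption does not spell out the loss, so the sketch below assumes an InfoNCE-style objective over flattened attention maps with cosine similarity; the function names, the temperature value, and the tiny 4-element maps are all hypothetical and chosen only for illustration. Attention maps that belong to the same concept act as positives, and maps from the other concept act as negatives.

```python
import math

def cosine(u, v):
    """Cosine similarity between two flattened attention maps."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)

def info_nce(anchor, positives, negatives, temperature=0.5):
    """InfoNCE-style loss for one anchor map (assumed form, not the paper's code).

    The loss is low when the anchor is similar to its positives and
    dissimilar to its negatives.
    """
    pos = sum(math.exp(cosine(anchor, p) / temperature) for p in positives)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))

# Hypothetical flattened attention maps over four spatial positions.
cat_base = [0.9, 0.8, 0.1, 0.0]         # "cat" token, base prompt
cat_lora = [0.8, 0.9, 0.0, 0.1]         # "cat" token, cat-LoRA prompt
dog_overlapping = [0.9, 0.7, 0.1, 0.1]  # dog attention landing on the cat region
dog_separated = [0.0, 0.1, 0.9, 0.8]    # dog attention in its own region

loss_overlap = info_nce(cat_base, [cat_lora], [dog_overlapping])
loss_separated = info_nce(cat_base, [cat_lora], [dog_separated])
print(loss_overlap > loss_separated)
```

Under these assumptions, the overlapping configuration yields the larger loss, so lowering the loss by updating the latent at test time would push the two concepts toward distinct regions.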

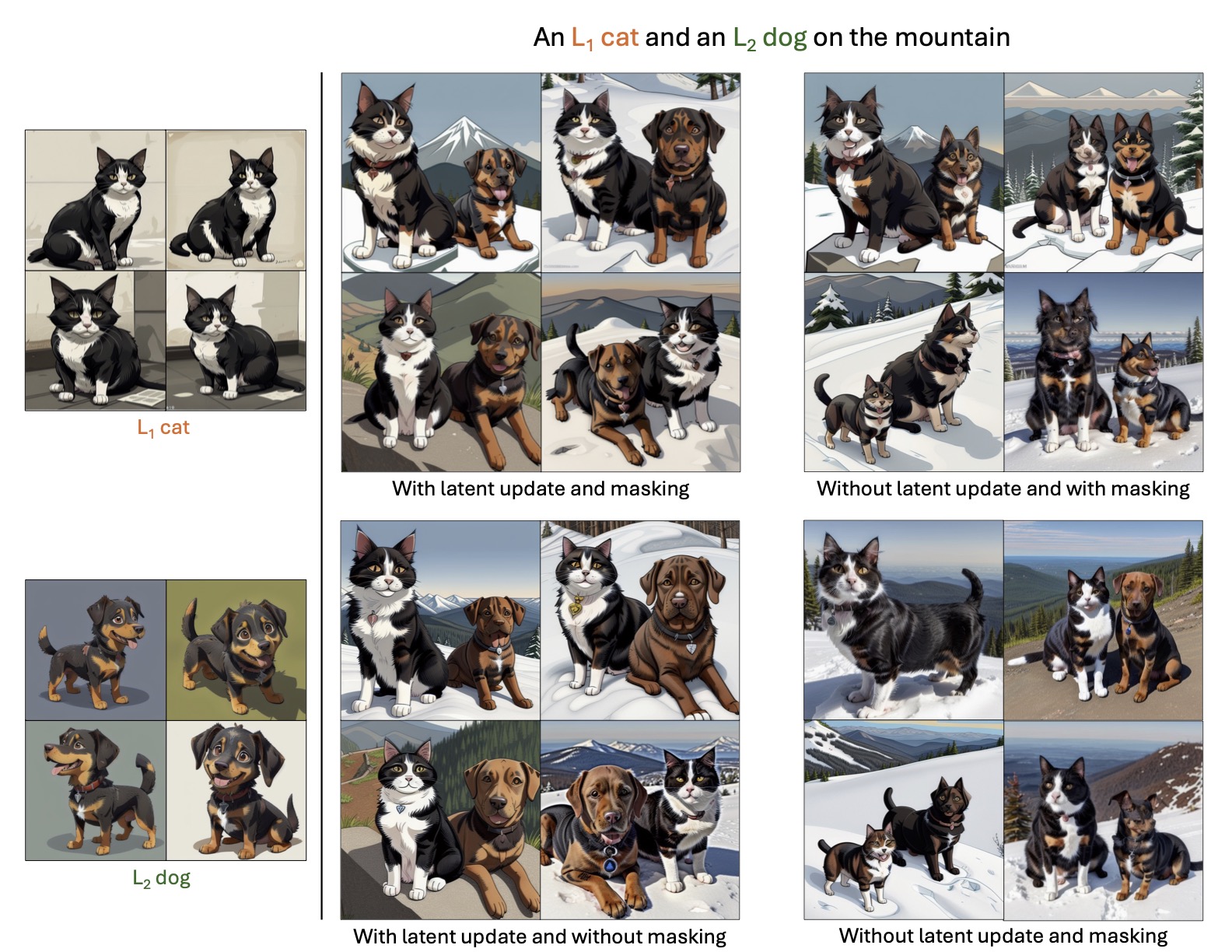

CLoRA Ablation Study. Using the L1 cat and L2 dog LoRAs, the effects of two techniques (latent update and latent masking) can be observed.
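The latent masking step named in the ablation can be sketched as follows. This is a minimal illustration under stated assumptions, not the released implementation: the relative-threshold rule, the `tau` value, the helper names, and the scalar per-position "latents" are hypothetical (real diffusion latents carry several channels per position).

```python
def threshold_mask(attention, tau=0.5):
    """Binary mask: 1 where attention exceeds tau times the map's peak (assumed rule)."""
    peak = max(max(row) for row in attention)
    return [[1 if a > tau * peak else 0 for a in row] for row in attention]

def fuse_latents(base, lora_latents, masks):
    """Copy each LoRA's latent into the base latent wherever its mask is 1.

    If masks overlap, the later LoRA in the list wins in this sketch.
    """
    fused = [row[:] for row in base]
    for latent, mask in zip(lora_latents, masks):
        for i, mask_row in enumerate(mask):
            for j, m in enumerate(mask_row):
                if m:
                    fused[i][j] = latent[i][j]
    return fused

# Hypothetical 2x4 attention maps for the two concepts.
cat_attention = [[0.9, 0.8, 0.1, 0.0], [0.7, 0.6, 0.1, 0.0]]
dog_attention = [[0.0, 0.1, 0.2, 0.9], [0.0, 0.1, 0.3, 0.8]]

base_latent = [[0.0] * 4 for _ in range(2)]  # latent from the base model
cat_latent = [[1.0] * 4 for _ in range(2)]   # latent from the cat LoRA
dog_latent = [[2.0] * 4 for _ in range(2)]   # latent from the dog LoRA

masks = [threshold_mask(cat_attention), threshold_mask(dog_attention)]
fused = fuse_latents(base_latent, [cat_latent, dog_latent], masks)
print(fused)
```

In this toy setup, each LoRA contributes only to the region where its own concept attends, while positions outside both masks keep the base latent.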

@InProceedings{Meral_2025_ICCV,
  author    = {Meral, Tuna Han Salih and Simsar, Enis and Tombari, Federico and Yanardag, Pinar},
  title     = {Contrastive Test-Time Composition of Multiple LoRA Models for Image Generation},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month     = {October},
  year      = {2025},
  pages     = {18090-18100}
}